Spark: "Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory" when submitting a job

When submitting a job to a Spark cluster, the following exception can occur:

Exception 1:Initial job has not accepted any resources; check your cluster UI to ensure that workers are registered and have sufficient memory

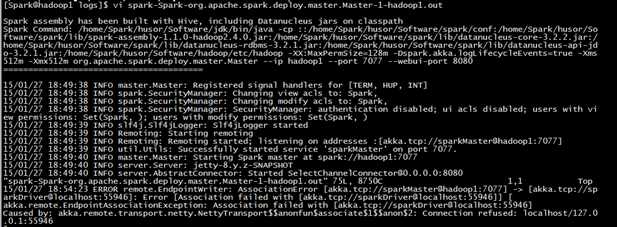

Checking the Spark log file spark-Spark-org.apache.spark.deploy.master.Master-1-hadoop1.out shows:

At this point the Spark Web UI looks like this:

Solution:

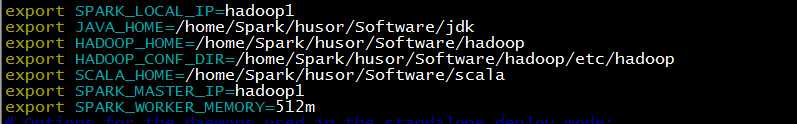

Edit the Spark configuration file spark-env.sh and change the value of SPARK_LOCAL_IP from localhost to the machine's hostname.

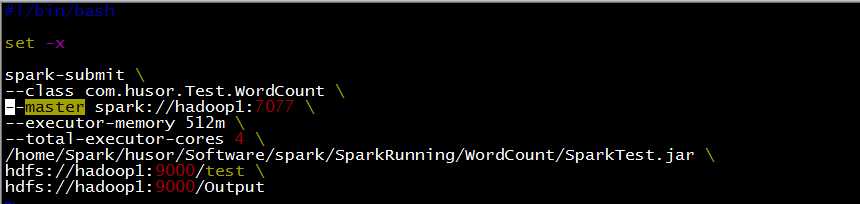
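For readers who cannot see the screenshot, the change might look roughly like the fragment below. This is a minimal sketch, not the author's exact file: the hostname `hadoop1` is an assumption inferred from the master log file name above, and your host may be named differently.

```shell
# Sketch of the relevant line in conf/spark-env.sh (assumed values).
# Before the fix the variable pointed at localhost:
#   export SPARK_LOCAL_IP=localhost
# After the fix it names this machine's hostname instead.
# "hadoop1" is an assumption taken from the log file name; use your own host.
export SPARK_LOCAL_IP=hadoop1
```

The master and workers generally need to be restarted for a change in spark-env.sh to take effect.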

Submit the job (WordCount) to the Spark cluster with the following script:

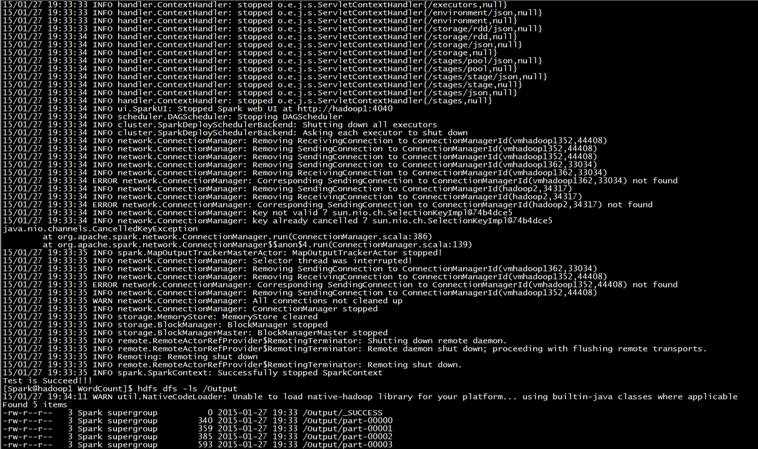
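Since the script is only available as a screenshot, here is a rough sketch of what a runSpark.sh of this kind could contain. It is not the author's script: the install location, jar path, main class, and input path are all hypothetical placeholders, and the master URL assumes the host `hadoop1` with the standalone default port 7077.

```shell
#!/bin/sh
# Hypothetical sketch of runSpark.sh; every value below is an assumption.
SPARK_HOME="${SPARK_HOME:-/opt/spark}"             # assumed install location
MASTER_URL="${MASTER_URL:-spark://hadoop1:7077}"   # hostname rather than localhost
APP_JAR="${APP_JAR:-/path/to/wordcount.jar}"       # hypothetical application jar
MAIN_CLASS="${MAIN_CLASS:-com.example.WordCount}"  # hypothetical main class
INPUT_PATH="${INPUT_PATH:-/path/to/input.txt}"     # hypothetical input file

# Build the spark-submit command line as positional parameters
# so that each argument stays correctly quoted.
set -- "$SPARK_HOME/bin/spark-submit" \
  --class "$MAIN_CLASS" \
  --master "$MASTER_URL" \
  --executor-memory 512m \
  --total-executor-cores 2 \
  "$APP_JAR" "$INPUT_PATH"

# Show the command, then run it only if spark-submit is actually installed.
echo "$@"
if [ -x "$1" ]; then
  "$@"
else
  echo "spark-submit not found at $1; nothing was submitted" >&2
fi
```

The executor memory and core count are kept small here so that the request stays within what the workers offer; adjust them to match the resources shown in the Web UI.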

Run the script to launch the Spark job; the process is as follows:

./runSpark.sh

Source: http://www.cnblogs.com/likai198981/p/4252453.html